Episode 44

From Lit Reviews to Live Evidence, and the Faculty Who Teach With It

Educators in Medicine,

In this newsletter, we continue our journey through the fundamentals of AI, its applications in medicine, and its transformative role in faculty development and education. Let’s dive into learning.

We’re at the point where “keeping up with the literature” no longer has to mean pouring another coffee and digging through PubMed until your eyes cross. AI is changing that, again. Here are three major updates worth your attention this episode.

1. Elicit: The New Lit Review on Steroids

Remember when writing a literature review meant months of poring over articles, creating endless spreadsheets, and wondering if you’d ever make it to the writing stage? Enter Elicit, a research assistant that pulls structured reviews together in minutes.

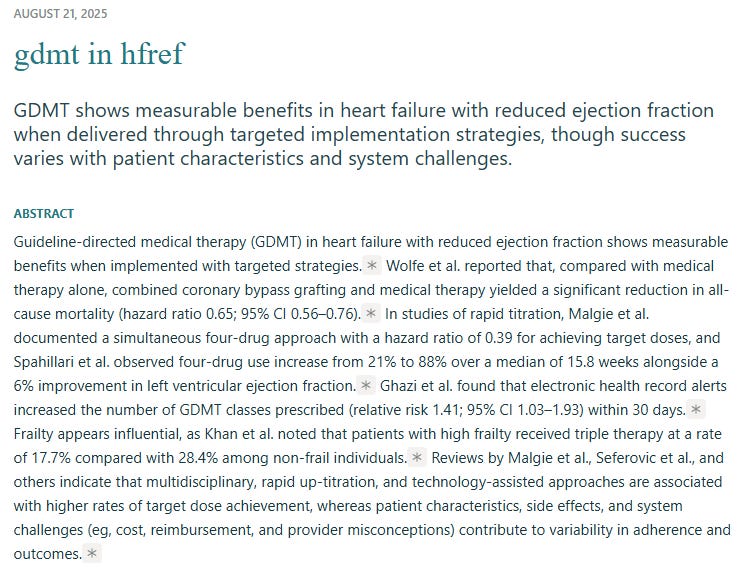

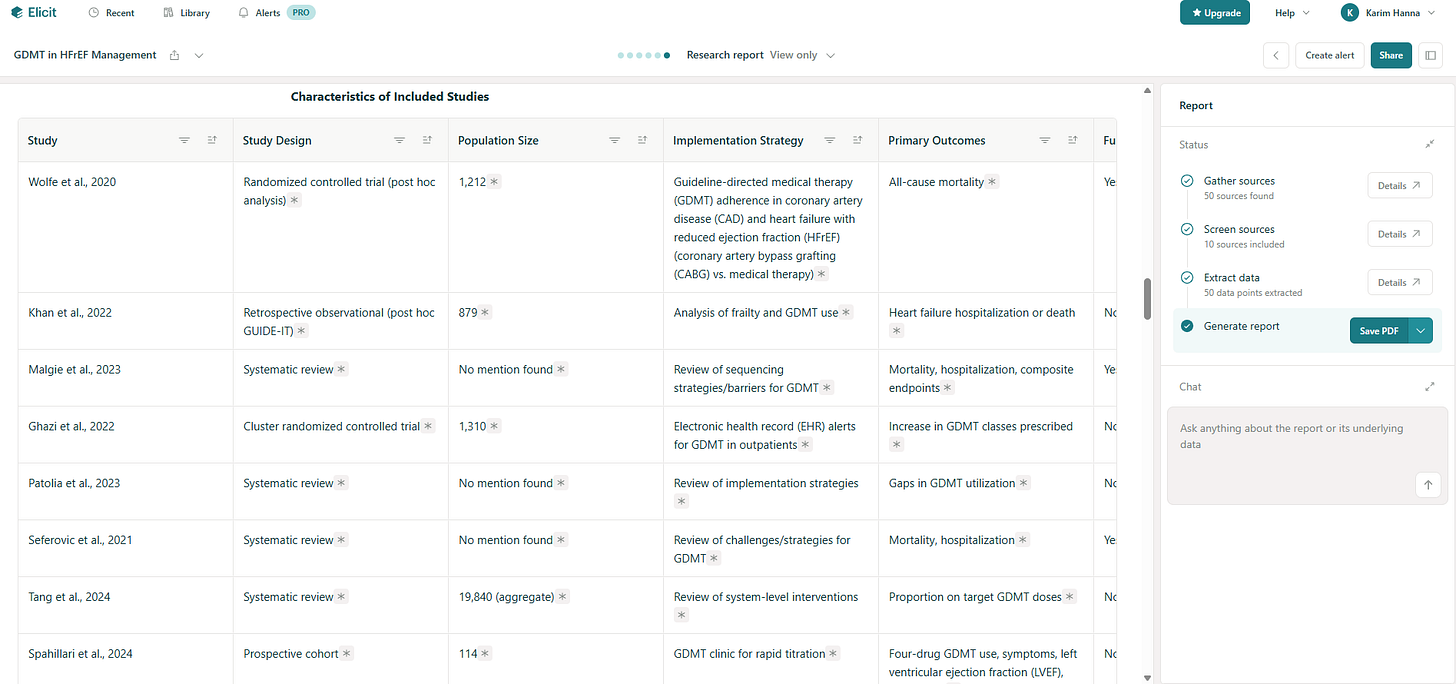

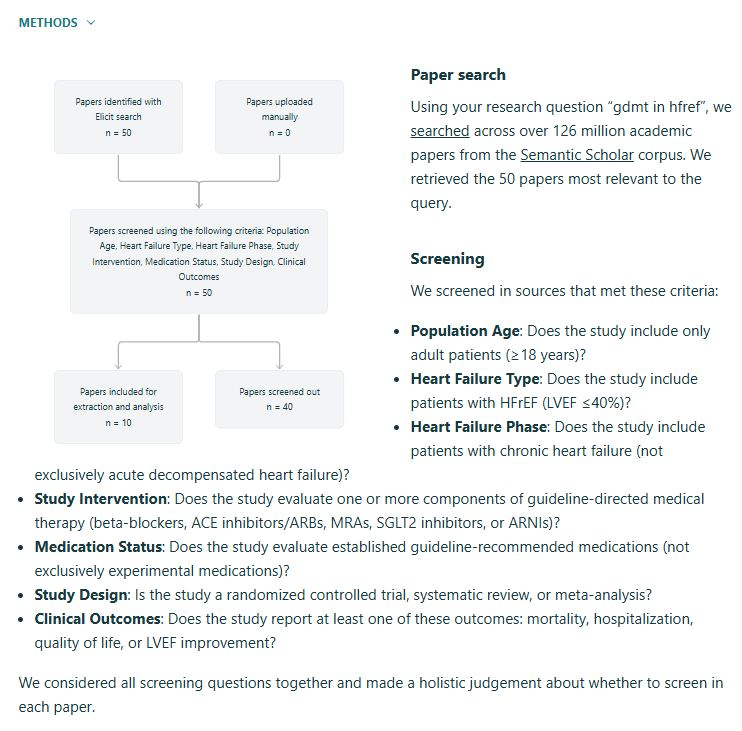

Here’s a live example I ran with the simple prompt “GDMT in HFrEF” (guideline-directed medical therapy in heart failure with reduced ejection fraction): see the full review with this link.

What you’ll notice:

Abstract and synthesis: Not just a list of citations, but a readable summary of what’s out there.

Methods made simple: Inclusion/exclusion criteria you can actually tweak, whether that means restricting to RCTs, narrowing to adults, or tossing out the small-sample noise.

Details that matter: Each study comes with a snapshot—sample size, outcomes, interventions—that feels like a built-in data extraction sheet.

The real kicker? You can build out a draft methods section for your paper that looks like it was written after weeks in EndNote, not five minutes on a Tuesday night. For med ed researchers, that means quicker drafts, cleaner grant prep, and less “I’ll get to it when life slows down” (which, let’s be honest, never happens).

If you’re not at least experimenting with AI-powered lit reviews, you’re choosing the horse-and-buggy while the rest of the world is already driving rocket ships.

2. AI Literacy in the NEJM (and Why We’re Behind)

The NEJM just published a sweeping piece on AI in clinical care: Artificial Intelligence in Medicine: A Review. It’s powerful—covering applications across specialties, ethical guardrails, and the trajectory of where we’re headed.

Here’s the summary version:

AI is already deeply embedded in imaging, documentation, and clinical decision support (CDS).

The “next wave” is less about what the models can do and more about how clinicians can use them well.

Ethical and equity concerns aren’t side issues—they’re core.

What jumped out for me is something we’ve been saying here for months: literacy is the bottleneck. Not technical ability, not data access—just basic clinician comfort with the tools.

One of my colleagues summed it up in an email that stopped me mid-scroll:

“You taught us keystrokes—now we need to learn to animate, interface, implement. Human factor engineering.”

That’s it. Knowing the shortcuts isn’t enough. We need to know how to weave AI into our workflows, how to check it, and how to bend it toward safer and better care. Without that, the gap between AI’s potential and its clinical impact will keep widening.

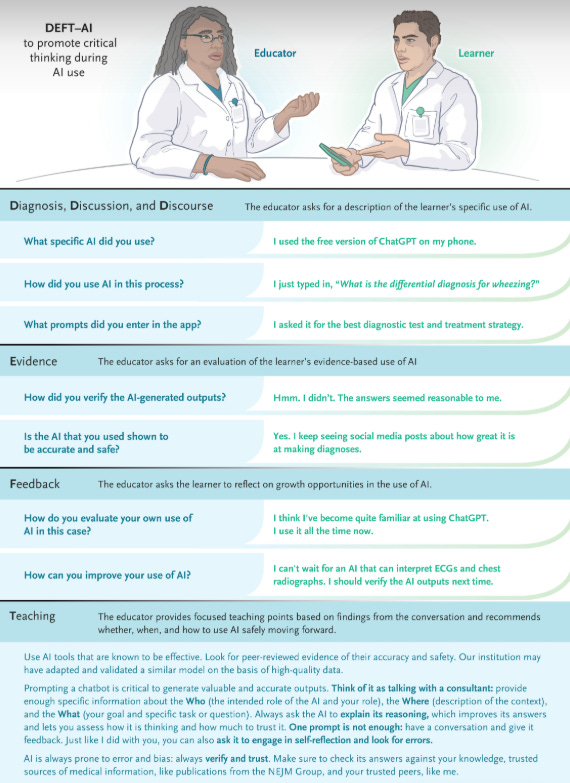

Faculty aren’t just passive consumers of AI—they’re now expected to model its use for learners. Tools like DEFT-AI give educators a structured way to practice, assess, and reflect on how they’re integrating AI into teaching. It moves the conversation from “what is AI?” to “how do I teach with AI responsibly and effectively,” which is exactly the gap the NEJM review flagged. I don’t know if we’re doing this well yet.

Read the NEJM piece. Then ask yourself not “what can AI do?” but “what can I do with AI in my actual Tuesday morning clinic?” Reach out to me or comment here about how you’re doing this with learners.

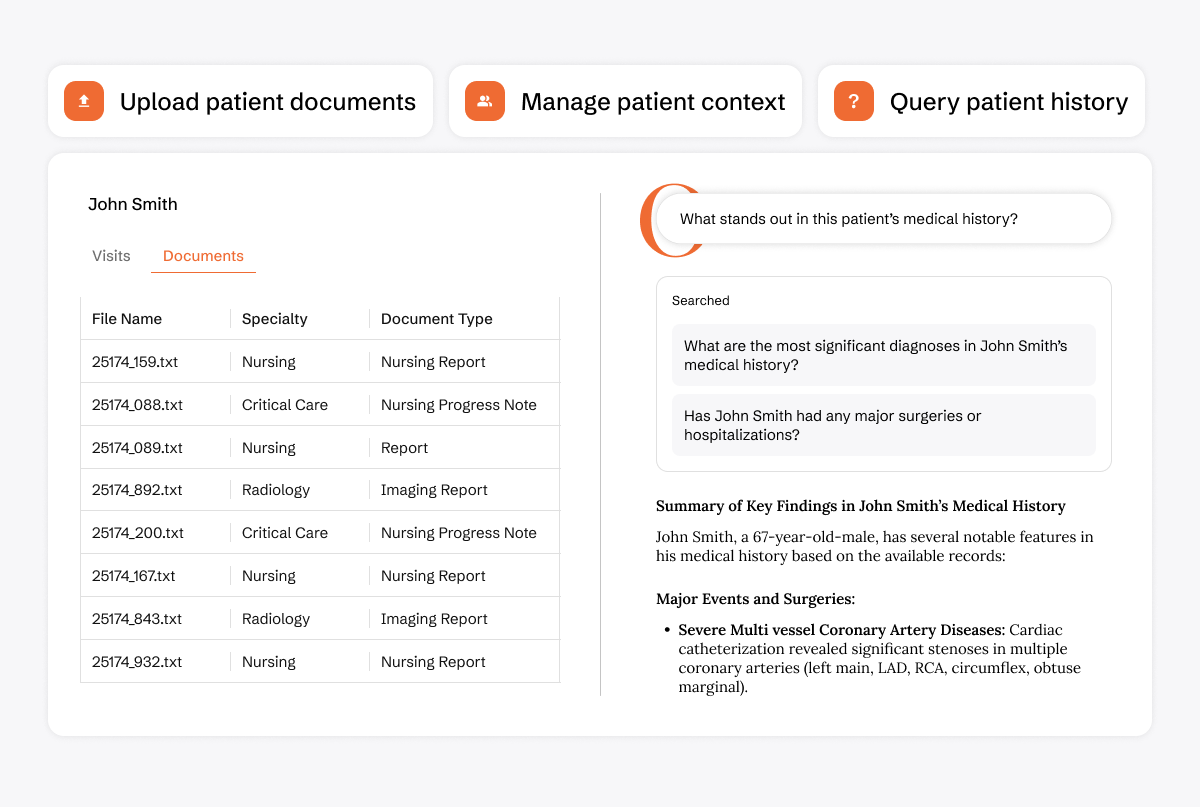

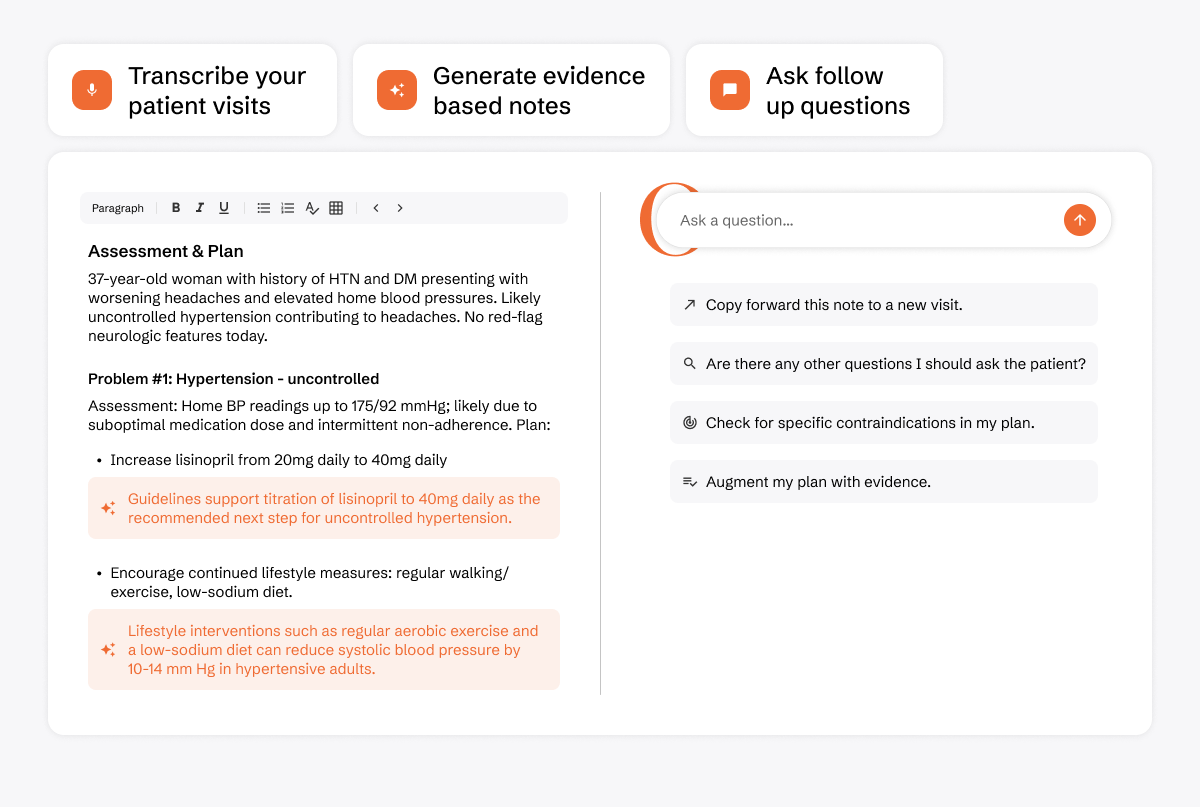

3. OpenEvidence Updates: AI in the Visit, Not Just After

The folks at OpenEvidence (OE) just rolled out a game-changer: Visits. Think of it as a personal clinical assistant that doesn’t just sit on the shelf; it lives in your note, in real time, while you’re with the patient. This was bound to happen, and OE is now making it mainstream. In my opinion, they’re the leaders in this space, and adding an ambient scribe was probably a small lift for them given their recent funding. My prediction: EMR integration comes next, and with it live, evidence-based suggestions on the fly while we talk with patients.

Highlights:

Evidence-Integrated Visits: As you document, the system pulls in guidelines and studies relevant to your assessment and plan. That’s right—no more toggling between UpToDate, PubMed, and your EMR mid-visit.

Evidence-Backed Transcription: It transcribes, then enriches your note with the latest recs (and no, not in the clunky, half-useful way most dictation software does).

Custom Templates: Build notes that reflect your documentation style, not the vendor’s idea of what “should” work.

Patient Context Included: After the visit, you can ask OE questions directly, and it knows the patient’s history, labs, and visit details.

And the best part: it’s free for all verified US healthcare professionals. You can try it today on the web or in the app.

This is the kind of tool that bridges the research-practice gap. It’s not a shiny toy—it’s workflow-integrated intelligence.

The future of AI in medicine isn’t just about “finding the evidence.” It’s about flowing with the evidence in your clinical notes, at the point of care, in real time.

AI in MedEd isn’t just about shiny tools. It’s about literacy, workflows, and learning to interface, animate, and implement. I remain a huge proponent of faculty development: if we’re not teaching with these tools, we aren’t training learners as well as we can. And I suspect that soon we’ll be doing patients a disservice by not using them. From drafting papers in minutes, to systematically asking learners about their AI use during clinic, to having an assistant that sits in your notes with you, the opportunity is now.

The only real question: are you going to keep watching, or start building these tools into your own teaching and practice?

💌 As always, thanks for reading. Get in touch and let me know your thoughts!

Thank you for joining us on this adventure. Stay tuned for more AI insights, best practices, and future editions of AI+MedEd.

For education and innovation,

Karim

Share this with someone, and have them sign up here.
